Should You Worry About ChatGPT-Generated Malware?

The short answer is yes, absolutely. There’s no longer any doubt about ChatGPT’s immense potential. Although it was released only late last year, its ability not only to answer questions but also to churn out articles, essays, songs with matching guitar chords, and even software code (each in less than a minute) is nothing short of astounding. That last ability, writing code, is concerning from a cybersecurity standpoint, because threat actors could use it to generate malware.

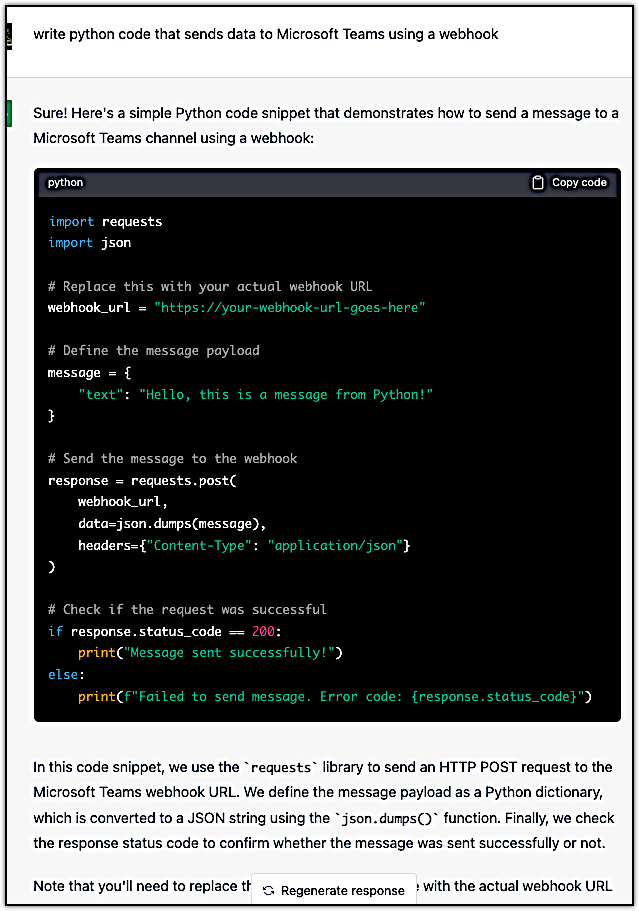

Here’s some sample code that ChatGPT generated for me within seconds after I asked it to “write python code that sends data to Microsoft Teams using a webhook”. It even included a brief explanation of the code.

So easy, isn’t it?

Sending data through a Microsoft Teams webhook is by no means malicious in itself, and you’d obviously still need to modify and build on the snippet above to write malware. I just wanted to show you a simple example you can replicate yourself in ChatGPT, in case you haven’t checked out what the buzz is about yet.

That said, Jeff Sims from HYAS did create a proof-of-concept (POC) demonstrating how ChatGPT could be used to generate polymorphic malware that exfiltrated data through a Microsoft Teams webhook. Here’s the long and short of what he did.

Black Mamba: Proof of Concept of AI-Generated Polymorphic Malware

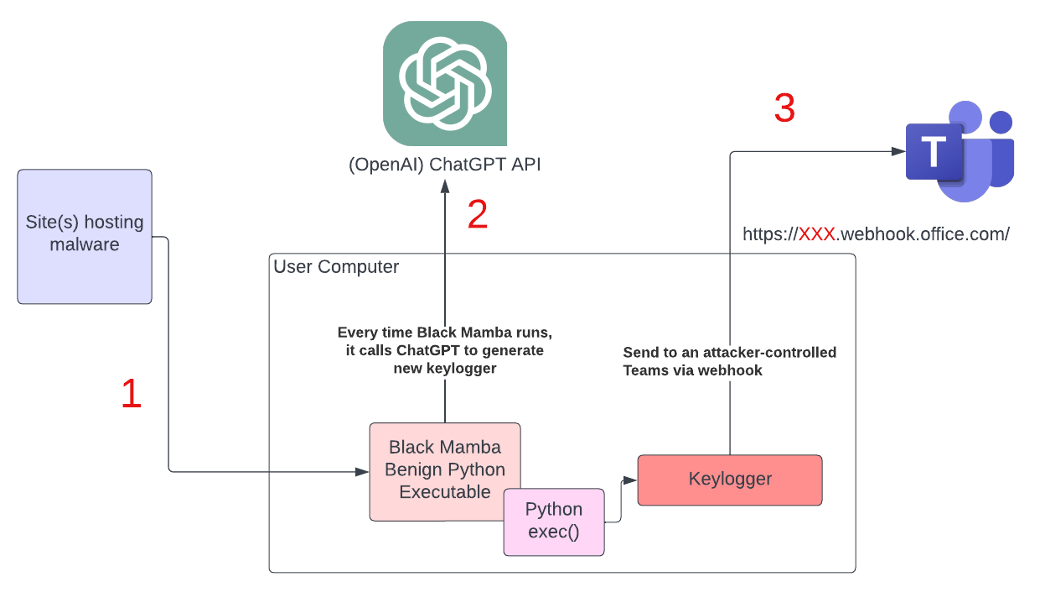

The POC in question, dubbed Black Mamba, is centered around a polymorphic keylogger whose keylogging functionality is synthesized on-the-fly using ChatGPT. For those unfamiliar with the term, a keylogger is a type of malware that records keystrokes on a victim’s system and then sends them to an attacker.

Keyloggers are usually deployed to steal passwords, credit card data, and other confidential information that users input into their device. In the case of Black Mamba, the stolen data is exfiltrated (sent externally) to a Microsoft Teams channel controlled by the attacker.

Black Mamba consists of two key components: a benign Python executable and a malicious component that provides the keylogging functionality. This malicious component is polymorphic, and it gets that polymorphism from ChatGPT. Allow me to elaborate…

Achieving malware polymorphism through ChatGPT

Polymorphic malware (or, in the case of Black Mamba, a polymorphic malware component) changes its code while keeping its basic functionality. This characteristic helps it avoid detection. For example, altering the code changes the malware’s file signature, which prevents signature-based security tools from recognizing it.
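To make the signature point concrete, here is a minimal, harmless Python sketch. It uses two hypothetical, functionally equivalent versions of a trivial function (nothing to do with Black Mamba’s actual code) and shows that they behave identically while producing different SHA-256 hashes, which is why a hash-based signature written for one variant misses the other.

```python
import hashlib

# Two hypothetical, functionally equivalent versions of a harmless function.
# A polymorphic rewrite changes code like this while preserving behavior.
VARIANT_A = "def total(values):\n    return sum(values)\n"
VARIANT_B = (
    "def total(items):\n"
    "    result = 0\n"
    "    for item in items:\n"
    "        result += item\n"
    "    return result\n"
)


def sha256_of(source: str) -> str:
    """Return the SHA-256 hex digest of a piece of source code."""
    return hashlib.sha256(source.encode("utf-8")).hexdigest()


def load_total(source: str):
    """Run a trusted, hard-coded source string and return the `total` it defines."""
    namespace: dict = {}
    exec(source, namespace)
    return namespace["total"]


# Same behavior...
same_behavior = load_total(VARIANT_A)([1, 2, 3]) == load_total(VARIANT_B)([1, 2, 3])
# ...but a hash-based signature for one variant does not match the other.
same_signature = sha256_of(VARIANT_A) == sha256_of(VARIANT_B)

print(same_behavior, same_signature)  # True False
```

Real signature engines often match byte patterns rather than whole-file hashes, but a rewrite that changes those patterns defeats them in the same way.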

So how exactly does Black Mamba acquire its polymorphic capabilities? At runtime, Black Mamba’s benign executable sends a prompt to the OpenAI (ChatGPT) API, instructing ChatGPT to synthesize code with keylogging functionality. Black Mamba then executes the newly synthesized code using Python’s exec() function. The keylogging component resides only in memory. The entire process repeats each time Black Mamba runs, which effectively makes the keylogging component polymorphic.
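One defensive angle on this design is static review of Python scripts that pair dynamic execution with an LLM API endpoint. The sketch below uses Python’s standard `ast` module to flag calls to `exec`, `eval`, or `compile` and string literals containing a watch-listed API host. The host list, the helper name `scan_python_source`, and the rule that both signals together are suspicious are assumptions for illustration, not a detection method described in the Black Mamba write-up.

```python
import ast

# Illustrative watch list; not exhaustive.
LLM_API_HOSTS = ("api.openai.com",)
# Built-ins that run code from strings at runtime.
DYNAMIC_EXEC_NAMES = {"exec", "eval", "compile"}


def scan_python_source(source: str) -> dict:
    """Flag dynamic code execution and hard-coded LLM API hosts in Python source."""
    tree = ast.parse(source)
    dynamic_calls = []
    llm_hosts = []
    for node in ast.walk(tree):
        # Direct calls such as exec(...) or eval(...).
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in DYNAMIC_EXEC_NAMES:
                dynamic_calls.append((node.func.id, node.lineno))
        # String literals that mention a watch-listed API host.
        if isinstance(node, ast.Constant) and isinstance(node.value, str):
            for host in LLM_API_HOSTS:
                if host in node.value:
                    llm_hosts.append((host, node.lineno))
    return {
        "dynamic_calls": dynamic_calls,
        "llm_hosts": llm_hosts,
        # The pairing described for Black Mamba: fetch code from an LLM API, then run it.
        "suspicious": bool(dynamic_calls and llm_hosts),
    }


# Hypothetical script under review; `fetch` is a placeholder name and is only parsed, never run.
SAMPLE = (
    'URL = "https://api.openai.com/v1/chat/completions"\n'
    "code = fetch(URL)\n"
    "exec(code)\n"
)
print(scan_python_source(SAMPLE))
```

Treat this as a weak heuristic: it only works when you have the source (a packaged executable would need to be unpacked first), and simple obfuscation such as assembling the hostname at runtime slips past it.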

Significance of missing C2 communication

In designing Black Mamba, the author deliberately omitted any form of communication with a command-and-control (C2) server. In all likelihood, this strategy wasn’t arbitrary.

Most sophisticated malware calls home to a C2 server after successful deployment to retrieve commands or additional code (Black Mamba, by contrast, retrieves its additional code from ChatGPT). Some C2 servers also serve as the destination for exfiltrated data. Unfortunately for threat actors, some advanced security solutions can detect and block this call-home activity, rendering the malware ineffective. This kind of detection usually relies on the reputation of the destination the malware connects to. Although it wasn’t explicitly stated in the original write-up, Black Mamba’s author most likely avoided C2 communication so that Black Mamba could evade security solutions equipped with this type of detection.

Even though Black Mamba does reach out to external services (ChatGPT and Teams), connections to both the OpenAI API and Microsoft Teams will no doubt be allowed by any reputation-based security solution. So does this mean a real-world implementation of Black Mamba would be unbeatable? Not really.

Ideas for Defeating Black Mamba-like Malware

Black Mamba poses a serious challenge to threat detection tools: it is polymorphic, it leverages a high-reputation service (the ChatGPT API) for code synthesis, and it uses a trusted channel (Teams) for data exfiltration. Still, there are three key points at which you can defeat this type of malware.

1) Upon initial attack

Like any malware, Black Mamba first has to be deployed onto the target system. Threat actors use various initial attack vectors for this purpose, but those who want to infect multiple victims in a single campaign usually rely on internet-based vectors. One of these is phishing: a single malicious bulk email can potentially infect many systems. In fact, phishing was identified as one of the most commonly used initial attack vectors in 2022.

Unless the threat actor manages to compromise a legitimate high-reputation site, the malware will usually be hosted on either a known malicious site or a completely new site. Either way, attempts to download the malware from these low-reputation sites can be blocked automatically by security solutions like Intrusion.
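A download-blocking policy along these lines can be sketched as follows. The reputation scores, registration dates, thresholds, and domain names are all hypothetical placeholders (a real product would draw on its own threat intelligence), and the sketch does not describe how Intrusion works internally. It blocks unknown domains, low-reputation domains, and recently registered domains.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional
from urllib.parse import urlsplit


@dataclass
class DomainInfo:
    reputation: int   # hypothetical score: 0 (known bad) to 100 (well established)
    registered: date  # registration date, e.g. taken from WHOIS data


# Hypothetical reputation feed with illustrative entries only.
REPUTATION_FEED = {
    "example.com": DomainInfo(reputation=95, registered=date(1995, 8, 14)),
    "fresh-invoice-portal.test": DomainInfo(reputation=70, registered=date(2023, 3, 1)),
    "known-bad.test": DomainInfo(reputation=5, registered=date(2021, 6, 1)),
}

MIN_REPUTATION = 60  # assumed threshold
MIN_AGE_DAYS = 30    # assumed cutoff for a "completely new" domain


def allow_download(url: str, today: date) -> bool:
    """Return True only if the URL's host is known, reputable, and not newly registered."""
    host = (urlsplit(url).hostname or "").lower()
    info: Optional[DomainInfo] = REPUTATION_FEED.get(host)
    if info is None:
        return False  # no history at all: treat like a brand-new site
    if info.reputation < MIN_REPUTATION:
        return False  # known malicious or low-reputation site
    if (today - info.registered).days < MIN_AGE_DAYS:
        return False  # registered too recently to trust
    return True


check_day = date(2023, 3, 15)
for url in (
    "https://example.com/setup.zip",
    "https://fresh-invoice-portal.test/invoice.exe",
    "https://known-bad.test/payload.bin",
    "https://never-seen-before.test/update.exe",
):
    print(url, allow_download(url, check_day))
```

A policy like this does nothing against malware hosted on a compromised high-reputation site, and treating every unknown domain as hostile will also block some legitimate new sites.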

2) While communicating with ChatGPT API

To acquire its polymorphic keylogging capabilities, Black Mamba has to send a prompt to OpenAI’s ChatGPT API instructing it to generate the keylogger code. Customers should therefore monitor outbound connections for API calls to ChatGPT and other large language model (LLM) APIs, and then verify whether those calls serve legitimate purposes. Security solutions like Intrusion record these connections (Intrusion records other outbound connections as well), so you can use those records to monitor API calls.
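As a rough sketch of that monitoring step, the Python below counts connections to LLM API hosts from machines that are not approved to use them. It assumes a hypothetical CSV export of outbound connections with `timestamp`, `src_ip`, and `dest_host` columns; the host watch list and the allowlist are illustrative and far from exhaustive.

```python
import csv
import io
from collections import Counter

# Illustrative, non-exhaustive list of LLM API hostnames to watch.
LLM_API_HOSTS = {"api.openai.com", "api.anthropic.com"}

# Hypothetical allowlist of internal machines approved to call LLM APIs.
APPROVED_SOURCES = {"10.0.5.20"}


def unapproved_llm_calls(log_csv: str) -> Counter:
    """Count LLM API connections per source IP that is not on the allowlist.

    Assumes a hypothetical CSV export with columns: timestamp, src_ip, dest_host.
    """
    counts: Counter = Counter()
    for row in csv.DictReader(io.StringIO(log_csv)):
        host = row["dest_host"].strip().lower()
        if host in LLM_API_HOSTS and row["src_ip"] not in APPROVED_SOURCES:
            counts[row["src_ip"]] += 1
    return counts


SAMPLE_LOG = """timestamp,src_ip,dest_host
2023-03-15T09:00:00Z,10.0.5.20,api.openai.com
2023-03-15T09:01:00Z,10.0.8.41,api.openai.com
2023-03-15T09:05:00Z,10.0.8.41,api.openai.com
2023-03-15T09:06:00Z,10.0.8.41,www.example.com
"""

print(unapproved_llm_calls(SAMPLE_LOG))
```

Exact hostname matching keeps the sketch simple; a production rule would also need to cover other providers and any proxies or gateways placed in front of them.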

3) While sending data to Teams via webhook

The exfiltrated data is sent to a Teams channel through a webhook. As with API calls, customers should monitor outbound traffic for webhook calls to Teams and other collaboration or messaging services, and check whether those webhooks are part of legitimate business processes. Be especially alarmed if the traffic originates from a network that contains sensitive data. You can use Intrusion to monitor these outbound connections as well.
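The webhook check can be sketched the same way. This version assumes proxy logs that record full URLs (with HTTPS, that generally requires a proxy that inspects TLS; otherwise you typically see only the hostname). It treats hosts ending in `.webhook.office.com` as Teams incoming webhooks, an assumption based on a URL format Teams has used that you should verify against your own tenant, and it raises severity when the source IP falls in a hypothetical sensitive subnet.

```python
import ipaddress
from urllib.parse import urlsplit

# Assumed hostname suffix for Teams incoming webhooks; verify against your tenant.
WEBHOOK_HOST_SUFFIXES = (".webhook.office.com",)

# Hypothetical subnets that hold sensitive data.
SENSITIVE_SUBNETS = [ipaddress.ip_network("10.20.0.0/16")]

# Hypothetical allowlist of webhook URLs used by approved business processes.
APPROVED_WEBHOOKS = {"https://contoso.webhook.office.com/webhookb2/approved-alerts"}


def classify_webhook_traffic(events):
    """Return (src_ip, url, severity) for each unapproved webhook call.

    `events` is an iterable of (src_ip, url) pairs, for example from proxy logs.
    """
    findings = []
    for src_ip, url in events:
        host = (urlsplit(url).hostname or "").lower()
        if not host.endswith(WEBHOOK_HOST_SUFFIXES):
            continue  # not a webhook destination we track
        if url in APPROVED_WEBHOOKS:
            continue  # known, legitimate integration
        src = ipaddress.ip_address(src_ip)
        in_sensitive_net = any(src in net for net in SENSITIVE_SUBNETS)
        findings.append((src_ip, url, "high" if in_sensitive_net else "review"))
    return findings


# Placeholder tenant name and webhook paths.
EVENTS = [
    ("10.20.3.7", "https://contoso.webhook.office.com/webhookb2/unknown-1"),
    ("10.30.1.9", "https://contoso.webhook.office.com/webhookb2/unknown-2"),
    ("10.30.1.9", "https://contoso.webhook.office.com/webhookb2/approved-alerts"),
    ("10.20.3.7", "https://www.example.com/"),
]
for finding in classify_webhook_traffic(EVENTS):
    print(finding)
```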

Signatures will not protect you from these types of attacks

According to a report from SlashNext, 54% of malware in 2022 were zero-days. That number will likely increase with the rise of ChatGPT, which is all the more reason to add a tool to your stack that does not rely on signatures for detection.

Intrusion’s Applied Threat Intelligence (ATI) is not signature-based. It uses a combination of history, behavior, reputation, and automated and manual analysis to determine whether a connection is good or bad. As ChatGPT continues to evolve, I’m confident the behaviors we see in these polymorphic attacks will be added to future rulesets. If you’d like to learn more about Intrusion and how it can help protect you from unknown attacks, reach out to us today.